PNMLR: Enhancing Route Recommendations With Personalized Preferences Using Graph Attention Networks

Millions of users rely on mapping services every day to plan trips of various kinds. While these services traditionally focus on providing the shortest routes, individual preferences often influence real-world route choices. However, most existing solutions neglect the impact of such preferences, limiting their ability to deliver truly personalized recommendations. This paper presents the Personalized Neuro-MLR (PNMLR) model, which enhances route recommendations by embedding user-specific preferences into the prediction process. Built on the Neuro-MLR (NMLR) framework, PNMLR leverages Graph Attention Networks (GAT) to integrate factors like user ID, time of day, and transport mode. This approach captures the variability in user behavior, offering personalized predictions for the most likely route. Extensive experiments on the Geolife GPS dataset show significant improvements across key metrics, including F1-score, precision, recall, and route reachability, compared to models that do not consider user preferences. These results demonstrate the potential of PNMLR to transform route recommendation systems from generic solutions to user-centric models.

Methodology

Data preprocessing

We conducted extensive preprocessing of the Geolife GPS dataset through the following steps:

- Outlier Removal: Removing GPS points that deviate significantly from plausible travel paths, using a speed threshold of 500 km/h.

- Staypoint and Duplicate Removal: Eliminating redundant data points recorded while users remain stationary, using a distance threshold of 5 meters.

- Compression: Merging points within 100 meters of each other to reduce data dimensionality while preserving trajectory shapes.

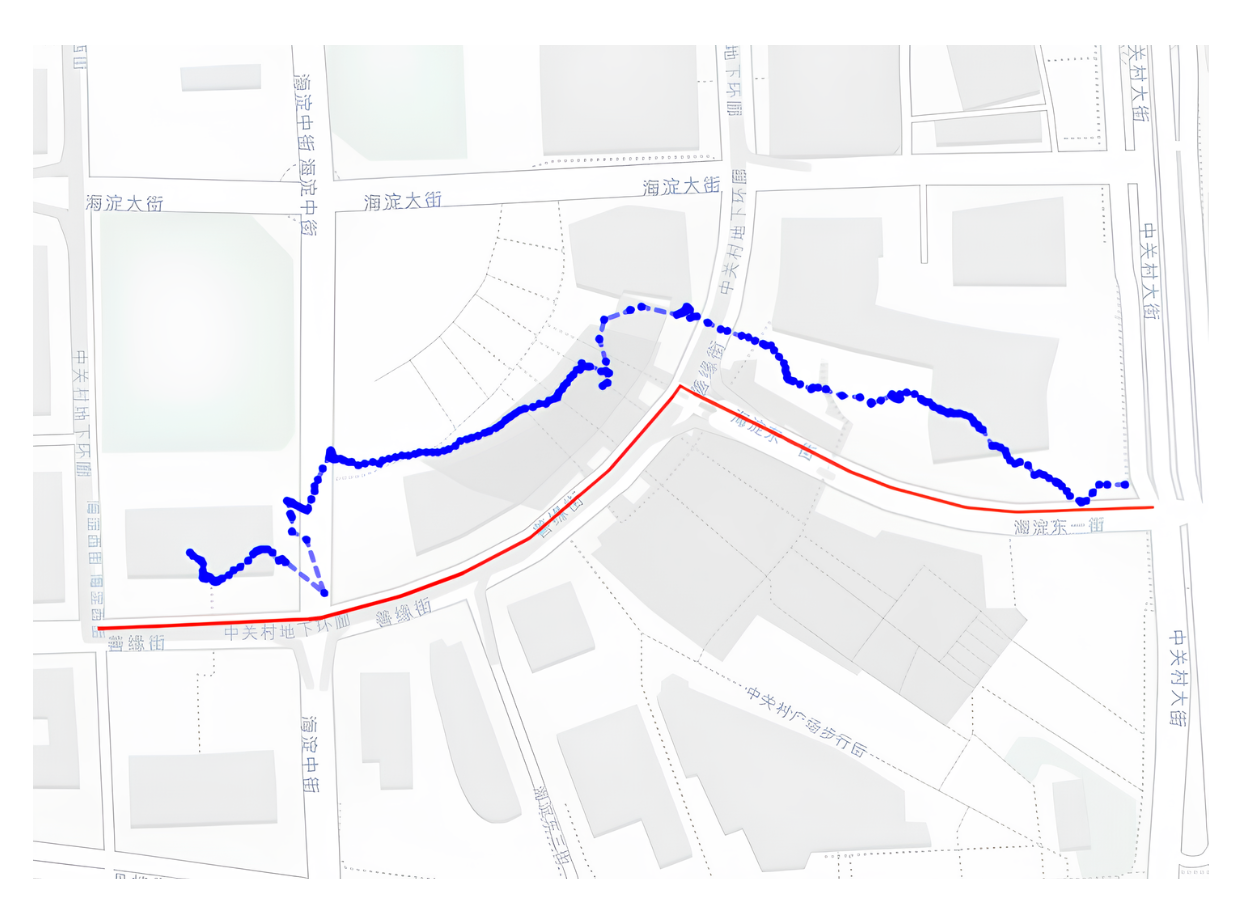

- Map Matching: Projecting GPS points onto a road network graph of Beijing using OpenStreetMap, ensuring continuous and valid paths.

- Normalization: Adjusting trajectory lengths to ensure comparability with baseline models.
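The outlier and staypoint filters above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names, the `(lat, lon, unix_seconds)` point layout, and the choice to measure speed and distance against the last kept point are all assumptions. Only the 500 km/h and 5 m thresholds come from the text.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(p, q):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def clean_trajectory(points, max_speed_kmh=500.0, min_step_m=5.0):
    """Filter a time-sorted list of (lat, lon, unix_seconds) points.

    Assumption: each point is compared against the last point that was kept.
    """
    if not points:
        return []
    kept = [points[0]]
    for lat, lon, t in points[1:]:
        prev_lat, prev_lon, prev_t = kept[-1]
        dist = haversine_m((prev_lat, prev_lon), (lat, lon))
        dt = t - prev_t
        if dist < min_step_m:
            continue  # staypoint or duplicate: barely moved since last kept point
        if dt <= 0 or (dist / dt) * 3.6 > max_speed_kmh:
            continue  # outlier: implied speed exceeds the threshold
        kept.append((lat, lon, t))
    return kept
```

Comparing against the last kept point (rather than the previous raw point) means a single GPS spike is dropped without also discarding the valid point that follows it.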

Building Dynamic Node Representations

We extend the standard Graph Convolutional Network (GCN) by integrating Graph Attention Networks (GATs). Unlike GCNs, which use fixed weights for aggregating information from neighboring nodes, GATs employ a self-attention mechanism to learn and assign different weights to each neighboring node during training.

An attention score is computed for each pair of neighboring nodes.

These scores are then normalized using softmax and used to weight the importance of neighboring nodes when updating representations. This dynamic weighting allows PNMLR to adapt to different users and contexts, capturing subtle variations in routing preferences.
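For reference, the standard GAT formulation of this step is shown below. Whether PNMLR uses exactly this form is an assumption; the symbols (node features $h_i$, shared weight matrix $W$, attention vector $a$, neighborhood $\mathcal{N}(i)$, nonlinearity $\sigma$) follow the usual GAT notation rather than anything defined in this summary.

```latex
% Unnormalized attention score between node i and neighbor j
e_{ij} = \mathrm{LeakyReLU}\!\left( a^{\top} \left[ W h_i \,\Vert\, W h_j \right] \right)

% Softmax normalization over the neighborhood of i
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}(i)} \exp(e_{ik})}

% Updated representation of node i
h_i' = \sigma\!\left( \sum_{j \in \mathcal{N}(i)} \alpha_{ij} \, W h_j \right)
```

Here $\Vert$ denotes vector concatenation, and the coefficients $\alpha_{ij}$ sum to one over the neighbors of node $i$.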

Incorporating User Preferences

Our model incorporates three key user preferences:

- User ID: Captures individual routing patterns and preferences that persist across multiple trips.

- Transport Mode: Considers different routing behaviors based on transportation method (walking, driving, bus) encoded as categorical variables.

- Time Features: Accounts for temporal variations using cyclical encoding of month, day, and hour information to preserve their periodic nature.
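One common way to realize the cyclical encoding mentioned above is a sine/cosine pair per time component. The sketch below assumes that approach; the function names and the fixed period of 31 for the day of the month are illustrative choices, not details taken from the paper.

```python
import math


def cyclical(value, period):
    """Map a periodic value onto the unit circle as (sin, cos)."""
    angle = 2 * math.pi * value / period
    return (math.sin(angle), math.cos(angle))


def encode_time(month, day, hour):
    """Encode month (1..12), day (1..31), and hour (0..23) as six features.

    Assumption: a period of 31 is used for every month's days.
    """
    return [
        *cyclical(month - 1, 12),
        *cyclical(day - 1, 31),
        *cyclical(hour, 24),
    ]
```

With this encoding, hour 23 and hour 0 end up as close to each other as hour 0 and hour 1, which a plain integer feature would not capture.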

For each preference category, we create a k-dimensional embedding vector. These vectors are then processed through two alternative pipelines:

- Concatenation: Combining the three k-dimensional vectors into a single larger vector.

- Averaging: Element-wise averaging to produce a single k-dimensional vector.

The aggregated preferences are then passed through an MLP block (either single-layer or three-layer) to create the final m-dimensional user preference embedding. Our experiments showed that concatenation combined with a three-layer MLP produced the best results.
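The two aggregation options and the MLP block can be illustrated with a small pure-Python sketch. The helper names, the ReLU activation, and the weight layout are assumptions made for illustration; a real implementation would use a deep learning framework with trained parameters.

```python
def concatenate(vectors):
    """Join three k-dimensional vectors into one 3k-dimensional vector."""
    return [x for v in vectors for x in v]


def average(vectors):
    """Element-wise mean, yielding a single k-dimensional vector."""
    n = len(vectors)
    return [sum(xs) / n for xs in zip(*vectors)]


def linear(x, weights, bias):
    """Dense layer: one row of weights per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def mlp(x, layers):
    """Apply a list of (weights, bias) layers.

    Assumption: ReLU between layers and no activation after the last one.
    """
    for i, (weights, bias) in enumerate(layers):
        x = linear(x, weights, bias)
        if i < len(layers) - 1:
            x = relu(x)
    return x
```

Note that the first MLP layer expects 3k inputs after concatenation but only k inputs after averaging, so the two pipelines need differently shaped first layers.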

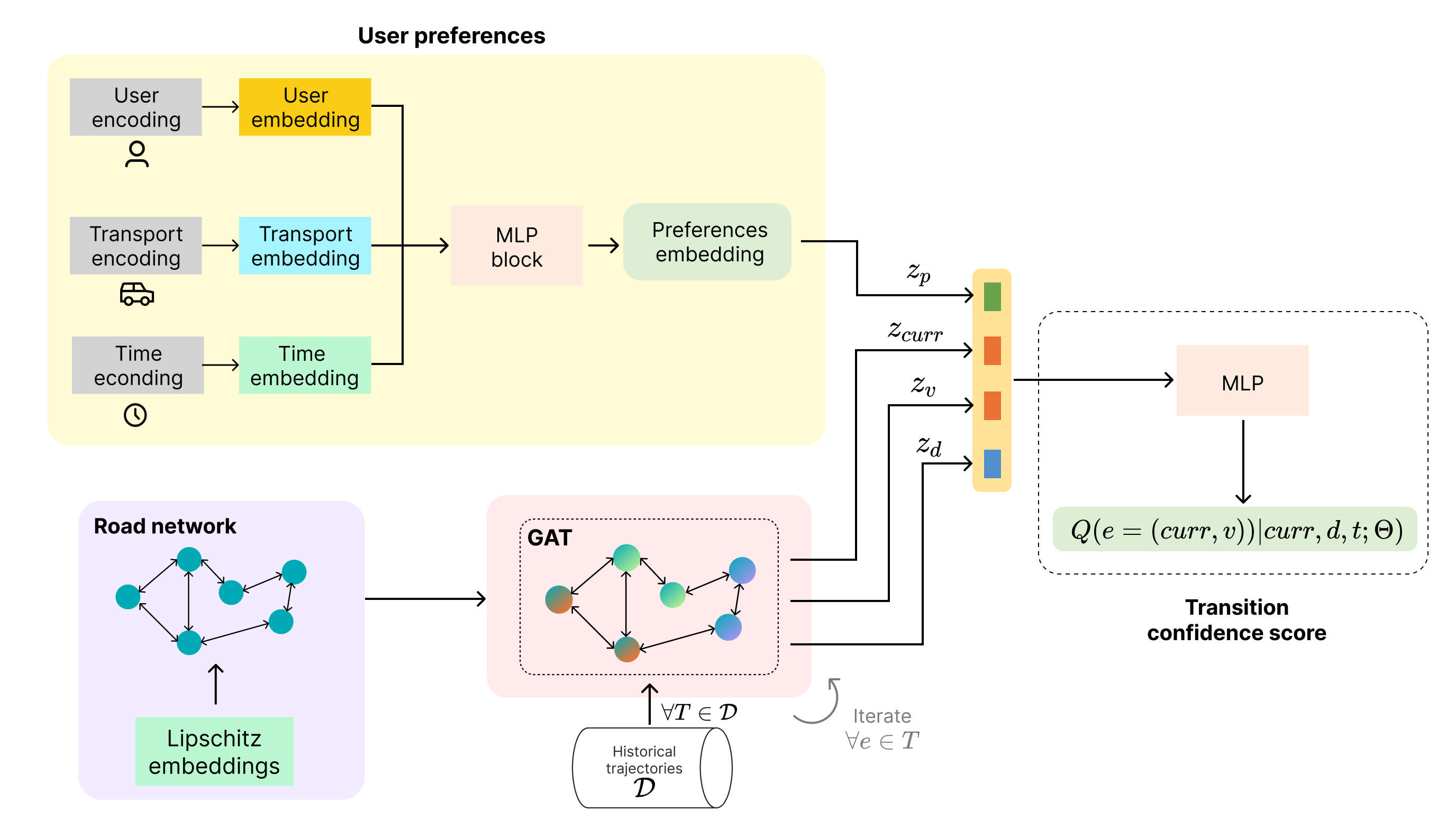

Complete Architecture and Training

The complete PNMLR architecture combines several key components:

- A Graph Attention Network that processes the road network to create node embeddings.

- A Preference Embedding Pipeline that processes user ID, transport mode, and time data.

- A Concatenation Module that combines the current node embedding, transition node embedding, destination node embedding, and preference embedding.

- A final MLP that predicts transition probabilities based on the concatenated embeddings.
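How the last two components might fit together is sketched below. It assumes that each candidate neighbor is scored from the concatenation of the four embeddings and that the scores are normalized with a softmax over the neighbors; `score_fn` is a hypothetical stand-in for the final MLP, and the dictionary-based interface is purely illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scalar scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def transition_probabilities(current, neighbors, destination, preference, score_fn):
    """Return {neighbor_id: probability} for the next transition.

    current, destination, preference: embedding vectors (lists of floats).
    neighbors: dict mapping neighbor ID to its embedding.
    score_fn: hypothetical stand-in for the final MLP; maps the
              concatenated vector to a scalar score.
    """
    ids = list(neighbors)
    scores = [
        score_fn(current + neighbors[n] + destination + preference)
        for n in ids
    ]
    return dict(zip(ids, softmax(scores)))
```

Because the preference embedding is part of every scored vector, two users at the same node with the same destination can receive different transition probabilities.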

Route prediction

During inference, given a query containing source, destination, and user preferences, the model predicts transitions from the current node to neighboring nodes based on the learned representations. The system follows a greedy approach, iteratively selecting the most likely transition until the destination is reached or the maximum path length is exceeded.
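The greedy decoding loop can be sketched as follows. The function and parameter names are hypothetical, `transition_prob` stands in for the trained model, and the visited set that prevents revisiting nodes is an added assumption rather than something stated in the text.

```python
def greedy_route(source, destination, neighbors, transition_prob, max_len=50):
    """Greedily build a route as a list of node IDs.

    neighbors: dict mapping a node ID to a list of adjacent node IDs.
    transition_prob: callable (current, candidate, destination) -> float,
                     a stand-in for the model's predicted probability.
    max_len: maximum number of nodes allowed in the route.
    """
    route = [source]
    visited = {source}  # assumption: nodes are not revisited, to avoid cycles
    current = source
    while current != destination and len(route) < max_len:
        candidates = [n for n in neighbors.get(current, []) if n not in visited]
        if not candidates:
            break  # dead end: no unvisited neighbor to move to
        current = max(
            candidates,
            key=lambda n: transition_prob(current, n, destination),
        )
        route.append(current)
        visited.add(current)
    return route
```

A route that ends anywhere other than the destination, either at a dead end or at the length limit, counts as a failure for the reachability metric.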

Results

Our experiments on the Geolife GPS dataset demonstrate that PNMLR significantly outperforms baseline models that do not consider user preferences:

- F1-score: 78.49% (+17.9% improvement over Neuro-MLR)

- Precision: 83.11% (+12.7% improvement)

- Recall: 74.36% (+12.9% improvement)

- Reachability: 75.39% (+21.9% improvement)

The most significant improvements were observed when using the Graph Attention Network variant with concatenation for preference aggregation. This confirms our hypothesis that user preferences play a crucial role in route selection and that attention mechanisms can effectively capture these personalized patterns.
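The exact metric definitions are not given in this summary. As a rough illustration only, the sketch below assumes that precision, recall, and F1 are computed from the overlap between predicted and actual route edges (unweighted), and that reachability is the fraction of predicted routes ending at the requested destination. All function names are hypothetical.

```python
def route_edges(route):
    """Set of directed edges (consecutive node pairs) in a node sequence."""
    return set(zip(route, route[1:]))


def route_metrics(predicted, actual):
    """Precision, recall, and F1 on edge overlap (assumed, unweighted)."""
    pred, true = route_edges(predicted), route_edges(actual)
    overlap = len(pred & true)
    precision = overlap / len(pred) if pred else 0.0
    recall = overlap / len(true) if true else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def reachability(predicted_routes, destinations):
    """Fraction of predicted routes whose last node is the destination."""
    if not destinations:
        return 0.0
    reached = sum(
        1 for route, dest in zip(predicted_routes, destinations)
        if route and route[-1] == dest
    )
    return reached / len(destinations)
```

Under these assumptions, a route can score well on precision and recall while still failing reachability if it follows the true path but stops short of the destination.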

BibTeX

@article{ponzi2025pnmlr,

title={PNMLR: Enhancing Route Recommendations with Personalized Preferences Using Graph Attention Networks},

author={Ponzi, Valerio and Comito, Ludovico and Napoli, Christian},

journal={IEEE Access},

volume={11},

year={2025},

doi={10.1109/ACCESS.2025.3555049},

publisher={IEEE}

}